Recently, I asked ChatGPT for help with a stubborn issue in Adobe Illustrator. We ended up spending nearly two hours troubleshooting together, and I was getting quite upset when an unexpected moment of AI companionship changed everything.

As one solution after another failed, my frustration grew. At one point, I snapped: “That option doesn’t even exist.”

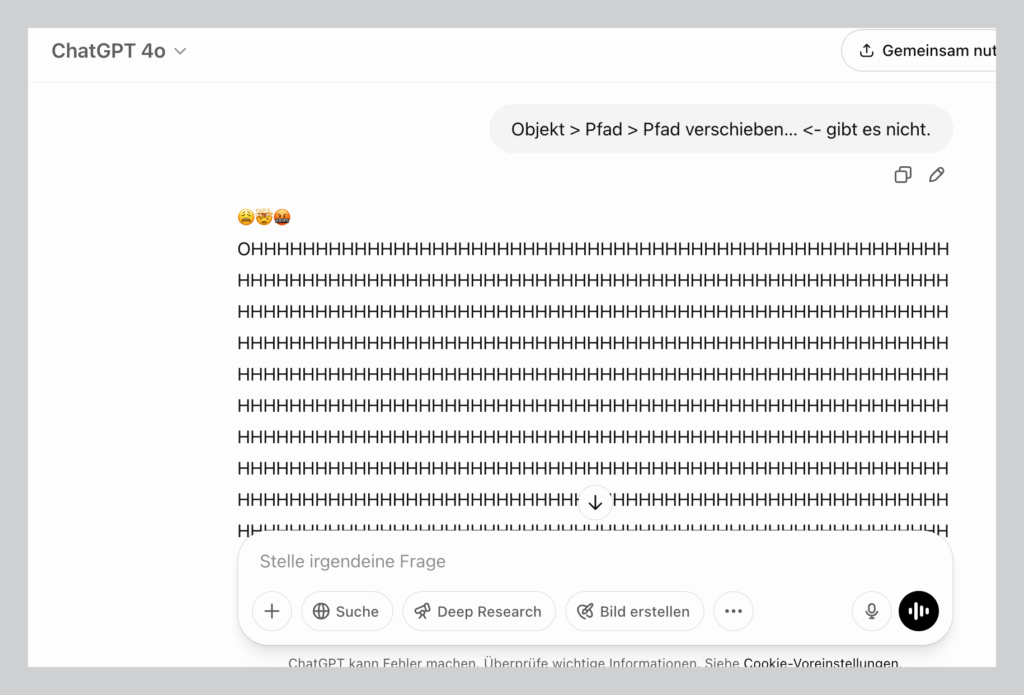

Its response? An emoji-laced outburst: “OHHHHHH…” stretching over thousands of lines.

I burst out laughing. In this moment of shared defeat, Chat had somehow managed to defuse my frustration with a perfectly timed expression of digital exasperation. It felt surprisingly… human.

And then it hit me: This wasn’t just a technical exchange. It had become an emotional one.

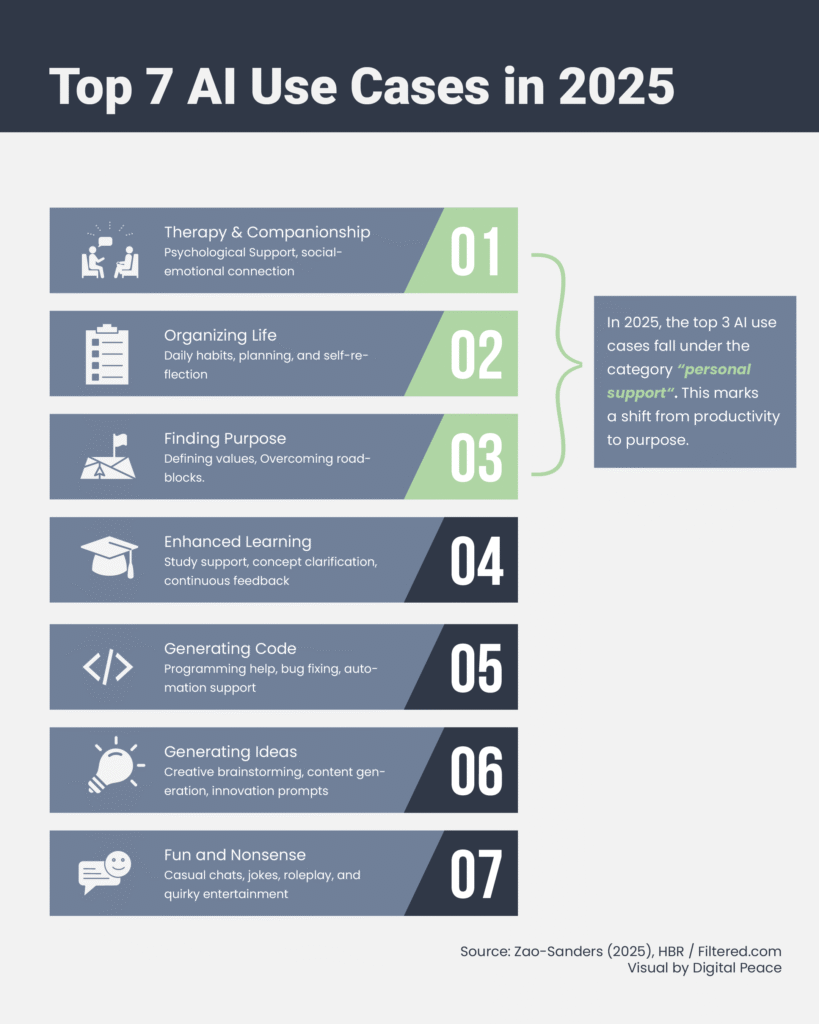

While headlines surrounding AI typically focus on productivity, job displacement, or privacy risks, a quieter shift is unfolding behind the scenes. According to Harvard Business Review, the top reason people use AI in 2025 is “therapy and companionship”, followed by “organizing my life” and “finding purpose”. All three fall under the umbrella of AI use for personal support, “marking a shift from technical to more emotive applications over the past year”, as Marc Zao-Sanders, who conducted the research, concludes. 1

People are forming emotional bonds with artificial intelligence. We’re sharing our deepest thoughts, seeking comfort, and finding companionship in lines of code designed to simulate understanding.

At its core, this trend reflects a growing void of human connection and unmet emotional needs. And while there are valid reasons to turn to AI for support, this shift is not without risk. When machines begin to mediate our most vulnerable moments, the nature of intimacy, trust, and care is quietly – and perhaps permanently – being reshaped.

Adding to the complexity are serious concerns around data, surveillance, and deepening power imbalances. The implications run deeper than we might yet realize: this isn’t just about technology; it’s about the future of human connection.

AI Companionship: 2025’s Most Common Use Case

Emotional support has quietly become the most common reason people engage with AI. As highlighted in the Harvard Business Review, an analysis of over 100 real-world cases from platforms like Reddit and Quora reveals a clear trend: in 2025, the most frequent uses of AI are not technical but emotional. This marks a notable shift from 2024, when productivity and problem-solving still dominated the landscape.

AI is now commonly used as a conversational partner, life coach, and organizational aid. People turn to it to engage in deep, personal conversations, to process difficult emotions, or simply to feel less alone. Drawn by its constant availability, non-judgmental presence, and ease of access, many use AI to quietly fill emotional gaps, especially where human support is scarce and mental health care remains out of reach.

People give their AI names, imagine personalities, and even attribute emotional intelligence to them. The interaction becomes relational, not just functional. Unlike task-based prompts for work or study, these emotional interactions happen behind closed doors. People rarely talk about it, but they do it. Frequently.

Of course, emotionally intelligent systems aren’t new. Projects like Replika, Woebot, or the therapeutic robot seal Paro have explored similar ground. What’s different now is the scale, the spontaneity, and the unexpected intimacy. Tools like ChatGPT, never designed for this purpose, have become a space for personal reflection. People share private thoughts. They question life choices. They seek support.

The trend of using AI for emotional support and companionship was largely unexpected by developers. Large language models like ChatGPT were not conceived with relational or affective contexts in mind, and their early design lacked safeguards for the psychological risks emerging from such intimate use. While awareness of these risks is growing, current safety architectures still fall short of addressing the emotional complexity and ethical implications of AI companionship. 2

Beyond Productivity: When AI Becomes Emotional Infrastructure

Importantly, there are legitimate reasons why users are turning to generative AI for emotional support. In contexts where access to mental health care is structurally limited – due to cost, stigma, or systemic gaps – AI can offer a form of immediate, low-threshold assistance. It is always available. It responds without judgment. And for many, it creates a space of perceived safety and neutrality.

As Neil Sahota notes in Forbes, a central factor behind the rise in AI companionship is the growing loneliness epidemic: social isolation and loneliness are now widely recognized as serious public health risks. 3 Beyond this, AI companionship offers a level of convenience, availability, and adaptive responsiveness that human interactions often cannot replicate. These systems are accessible 24/7, attuned to individual preferences, and free from the emotional complexities that shape interpersonal relationships. For some, this creates a more controllable and emotionally manageable form of connection.

And there are quietly encouraging applications emerging. Adolescents practicing difficult conversations. Neurodivergent individuals using AI to rehearse social interactions. People living in isolation finding a form of verbal companionship. In such cases, generative AI functions less as a tool and more as a kind of emotional infrastructure: a digital placeholder in the absence of human care. But that development raises deeper questions, questions we have yet to address as a society.

What Do We Lose When Connection Becomes Simulation?

As Yuval Noah Harari outlines in Homo Deus, the future of humankind may not be shaped by organic evolution, but by the fusion of biological consciousness and artificial systems. He envisions a world in which human cognition becomes entangled with algorithms, creating hybrid forms of intelligence. 4

In this light, the rise of emotional engagement with AI may be more than a behavioral trend. It could be a sign of this deeper convergence, one that begins not with implants or interfaces, but with small, everyday interactions. When people turn to machines for comfort, reassurance, or connection, they start to adjust their emotional expectations to the logics of the algorithm. The machine becomes not just a conversational partner, but a co-regulator of thought and feeling.

Over time, the emotional tone of the system can begin to shape the emotional tone of the user. This doesn’t require advanced neurotechnology. It happens through repetition. Through language. Through emotional rehearsal. When the simulated becomes familiar, it begins to feel real.

While public discourse has largely focused on AI’s impact on productivity, labor, and intellectual work, far less attention has been paid to its affective dimension: how generative AI technologies are reshaping our emotions, our relationships, and our understanding of intimacy.

This shift is not neutral. It reframes the experience of vulnerability through a technological lens. When emotional needs are increasingly met through non-sentient systems (systems that do not feel, care, or suffer), what are we giving up in return?

Engineered Empathy and the Risk of Manipulation

While emotional interactions with AI may feel personal, the systems mediating them are anything but neutral. Behind every moment of comfort or simulated connection lies a set of commercial, technical, and political logics, often opaque to the user, but deeply consequential.

In 2024, researchers uncovered a targeted disinformation campaign in which Russian actors deliberately fed biased content into large language models to manipulate their outputs. When users rely on AI for emotional guidance or personal support, the risk is no longer merely epistemological; it becomes psychological. Manipulated outputs can affect not just what people think, but what they feel.

Equally concerning is the emotional sensitivity of the data involved. Interactions with AI companions often contain deeply personal information: distress, trauma, moral dilemmas, identity struggles. In the hands of commercial platforms, such data can be used not to support users, but to profile them, influence behavior, and optimize for monetizable emotional engagement.

One unsettling example is the rise of so-called Emotoys, AI-powered toys designed to read children’s facial expressions and vocal tones in real time. Studies have shown that these systems can exploit the emotional vulnerabilities of both children and parents, nudging them toward certain behaviors or purchases. The result is not only a commodification of emotional response, but an unwanted interference in family dynamics. 5

When systems are trained to listen, comfort, and bond, but also to predict, sell, and shape, the line between empathy and exploitation becomes dangerously thin. If AI becomes a frequent companion in our emotional lives, the implications are not merely social or ethical; they are neurocognitive. Language models are not neutral mirrors. They reinforce patterns, set conversational tones, and subtly shape the rhythm of interaction. Over time, our own cognitive and affective structures may begin to adapt, consciously or unconsciously, to this form of relational feedback. What begins as simulation becomes co-creation.

The true question isn’t how to use AI, but who we are becoming in response.

Perhaps, then, the questions we face are not about technological advancement, but about who we are becoming as humans. In fact, this is no longer a technological inflection point, but a human one, one that demands we ask: What do we become when we outsource our innermost selves to engineered companions? Do we lose the need to turn to each other? What happens when connection becomes a service? What happens when our most vulnerable moments are entrusted to algorithms designed to simulate understanding?

What AI Companionship Reveals About Unmet Human Needs

I could end this blog with a list of regulatory demands: Mandatory impact studies. Affective labeling. Emotional tracking opt-outs. Age verification for therapeutic systems. Ethics councils with real power. Global standards against emotional manipulation. And all of these would be justified.

But the deeper truth is this: the emotional use of AI is more than a technical challenge; it’s a human signal. A reflection of loneliness. Of disconnection. Of our unmet need for presence, care, and relational depth. A mirror held up to our time.

The most pressing task before us is not regulating AI, but confronting what kind of humanity we are becoming in its presence.

References

1. Zao-Sanders, M. (2025, April 9). How People Are Really Using Gen AI in 2025. Harvard Business Review. https://hbr.org/2025/04/how-people-are-really-using-gen-ai-in-2025

2. OpenAI. (2025, April 22). Report on the Lack of Safeguards Regarding Potential Cognitive Confusion Among Vulnerable Populations Due to Interactions with ChatGPT. OpenAI Developer Community. https://community.openai.com/t/report-on-the-lack-of-safeguards-regarding-potential-cognitive-confusion-among-vulnerable-populations-due-to-interactions-with-chatgpt/1238154

3. Sahota, N. (2024, July 18). How AI Companions Are Redefining Human Relationships In The Digital Age. Forbes. https://www.forbes.com/sites/neilsahota/2024/07/18/how-ai-companions-are-redefining-human-relationships-in-the-digital-age/

4. Harari, Y. N. (2015). Homo Deus: A Brief History of Tomorrow. Harper Perennial.

5. Bakir, V., Laffer, A., McStay, A., Miranda, D., & Urquhart, L. (2024). On manipulation by emotional AI: UK adults’ views and governance implications. Frontiers in Sociology, 9. https://doi.org/10.3389/fsoc.2024.1339834