Would you trust Elon Musk with your brain data?

This hypothetical question is becoming less and less hypothetical. Brain-computer interfaces (BCIs) are currently advancing rapidly from science fiction to clinical reality.

BCIs are technologies designed to link our brains to machines and create direct communication pathways between the brain and external devices. These technologies range from wearable headsets with electrodes to tiny implants placed directly in the brain. They promise revolutionary applications: restoring communication for those who cannot speak, returning movement to paralysed limbs, and potentially enhancing human capabilities beyond their natural limits.

Yet this incredible potential comes with unprecedented risks. BCIs capture our neural activity, the very electrical patterns that constitute our thoughts, emotions, and intentions.

If we couldn’t regulate cookies, how will we regulate cognition?

The first time I came across brain-computer interfaces was about four years ago. I had just finished reading Shoshana Zuboff’s “The Age of Surveillance Capitalism”. Her work had left a lasting impression on me, especially the idea that our personal data had become raw material for profit, extracted and used without real consent. [1]

Consider what happens when the most intimate data imaginable, our thoughts themselves, become subject to the same data extraction models that already exploit our digital lives.

This transition is happening faster than most realize. Neuralink has already conducted human trials. Meta is investing heavily in non-invasive BCI research. Military contractors are exploring neural interfaces for enhanced soldier performance.

What makes this particularly concerning is how these developments are unfolding within our current political and regulatory environment, where tech platforms consistently resist privacy oversight while expanding into new domains of data collection. Meanwhile, regulatory frameworks for neural data remain scattered across different agencies with no comprehensive governance approach.

This combination of rapid technological advancement and regulatory weakness creates the perfect conditions for repeating surveillance capitalism’s mistakes on an unprecedented scale. Neural data doesn’t just represent another category of personal information; it represents direct access to the biological substrate of human cognition itself.

The fundamental questions these developments raise remain largely unanswered from previous technological waves. We have not adequately addressed the harms of social media manipulation, AI-driven decision-making, or open data exploitation. The promised benefits to society have largely failed to materialize, unless we consider personalized advertising a meaningful gain. This article aims to show how brain-computer interface developments could raise serious risks if we continue along existing trajectories, without first asking the critical question: How can we ensure democratic oversight over Big Tech?

The technology is advancing faster than our ability to govern it. Without robust protections in place, we risk surrendering our most intimate cognitive processes to the same corporate structures that have already failed to safeguard our digital lives.

Brain-Computer Interfaces: From Medical Miracle to Consumer Product

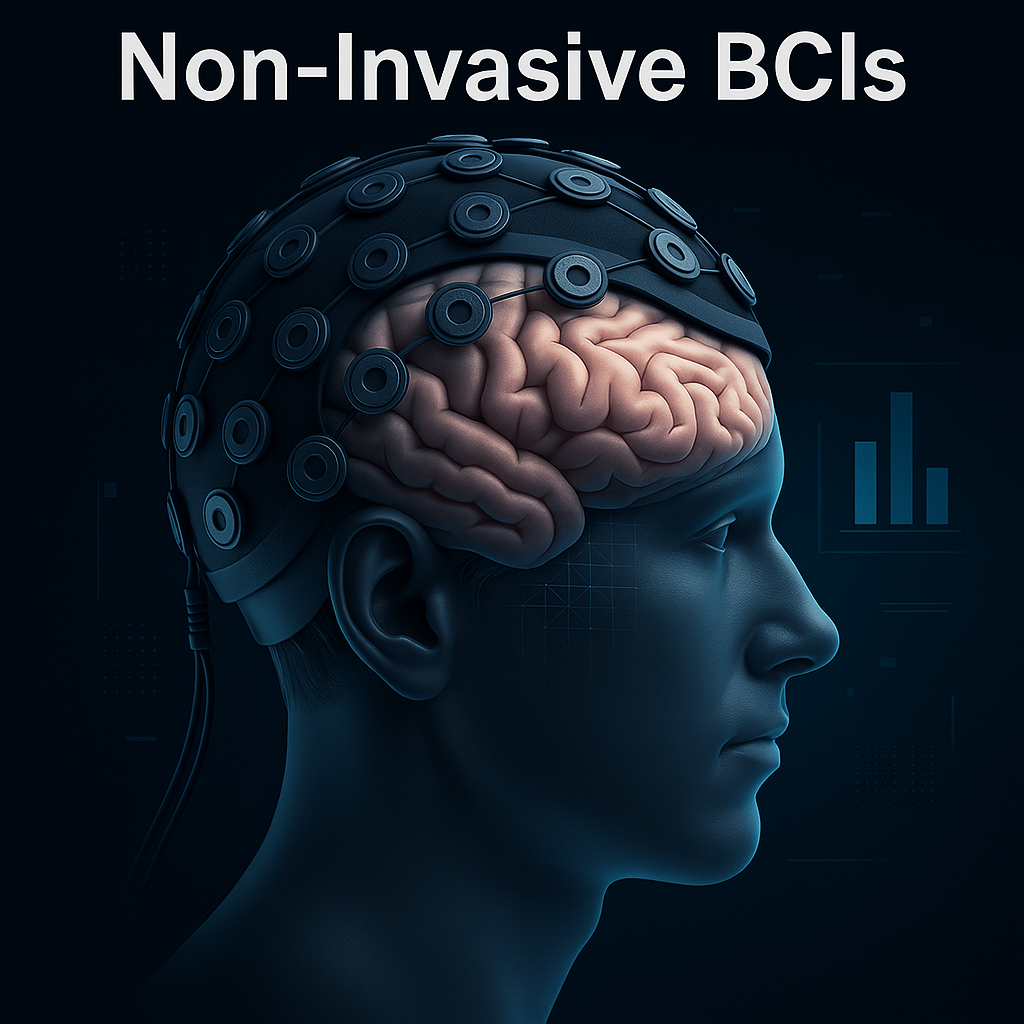

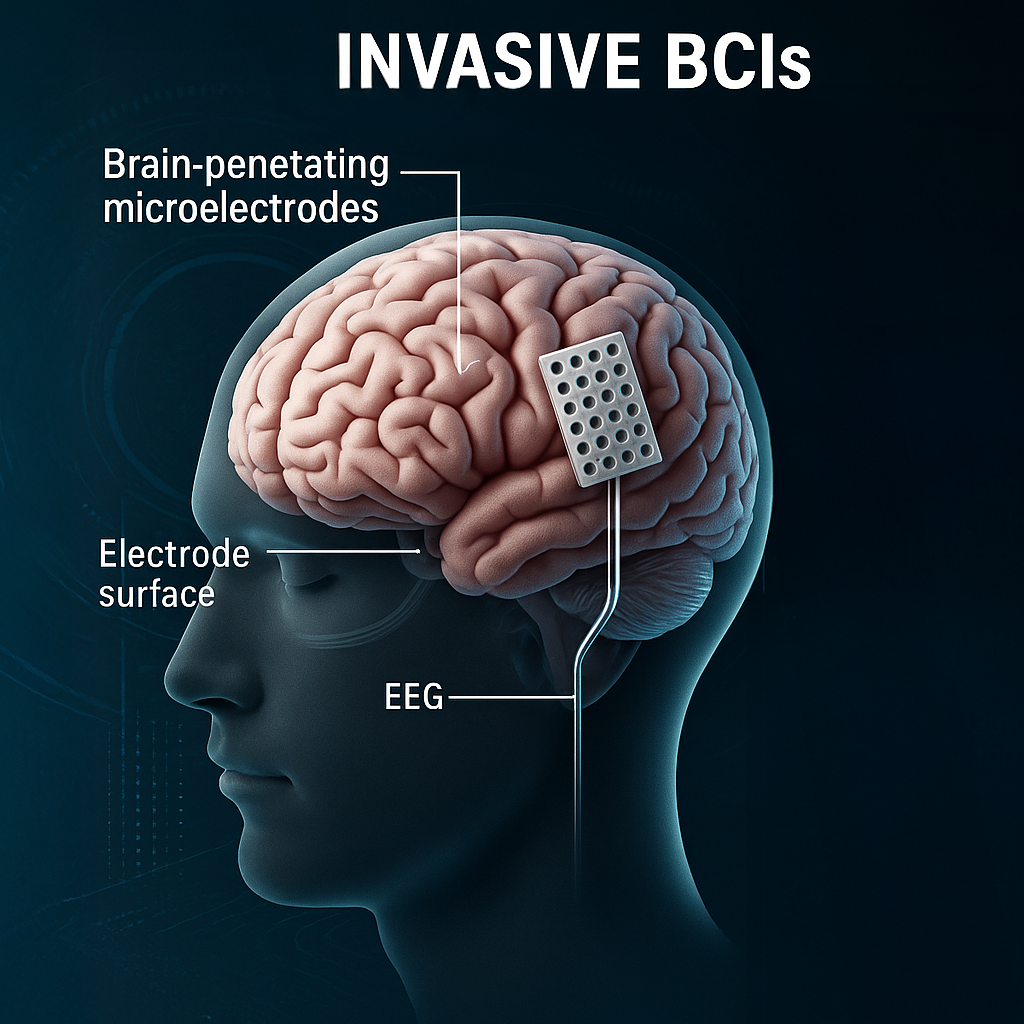

To understand the stakes, we must first understand the technology. BCIs broadly fall into two categories: non-invasive and invasive systems.

Non-invasive brain-computer interfaces, like brain-scanning headsets, record brain activity from outside the skull. They offer accessibility without surgical risks but provide limited spatial resolution. This means they can detect when brain activity happens with reasonable precision, but are less accurate at determining where exactly it occurs.

Invasive brain-computer interfaces, by contrast, use electrodes implanted in or on the brain surface. These systems achieve dramatically higher resolution, but require neurosurgery with its attendant risks. Some BCIs are “read-only,” capturing neural activity without influencing it, while “bidirectional” systems can both record and stimulate brain activity.

The field has seen remarkable progress in recent years. In 2021, researchers led by David Moses at the University of California, San Francisco, reported in the New England Journal of Medicine a breakthrough brain-to-speech device (a “speech neuroprosthesis”) that enabled a man with severe paralysis to communicate in sentences. The system decoded words from his brain activity with 74% accuracy at a rate of about 15 words per minute. That is still far slower than natural speech (about 150 words per minute), but a dramatic improvement over previous systems. [2]

Similarly, in 2017, Ajiboye and colleagues demonstrated in The Lancet the first system that combined brain signals with electrical muscle stimulation to restore both reaching and grasping movements in a person with tetraplegia. Their participant could perform functional tasks like drinking from a cup and feeding himself with a fork, activities impossible without the technology. [3]

These medical breakthroughs represent just the beginning. The BCI field is expanding rapidly across multiple domains (medical, military, and commercial), each with its own adoption timeline and ethical implications.

In the medical sector, where BCIs are already in use for specific clinical cases, broader scalability is projected by 2030–2035. In the military sector, BCI research remains in early stages, with applications such as advanced soldier cognitive and physical enhancement, brain-to-brain communication, and improved situational awareness still largely experimental and without a clear timeline for widespread operational deployment. [4]

Consumer-facing BCIs are also beginning to emerge, though adoption is slower. Mass-market viability is anticipated by 2040–2045. Companies like Emotiv are already introducing non-invasive EEG devices into wellness and entertainment markets, and IDTechEx projects the consumer BCI sector will surpass $1.6 billion by 2045, driven by VR integration. [5]

Wearables that track brain activity for mental health and sleep monitoring are gaining traction, with forecasts predicting an 8.4% compound annual growth rate. However, key barriers remain: non-invasive systems still struggle with signal accuracy, while invasive systems face significant medical, regulatory, and ethical hurdles. Big Tech investments, from Meta to Neuralink, are now targeting these very challenges.
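To put the 8.4% growth figure in perspective, here is a rough worked illustration of what compound annual growth implies. The starting market size $V_0$ and the number of years $n$ are placeholders, since the forecast's base year and base value are not given here, and the calculation assumes the rate stays constant.

$$
V_n = V_0\,(1 + 0.084)^n, \qquad n_{\text{double}} = \frac{\ln 2}{\ln 1.084} \approx 8.6 \text{ years}
$$

In other words, a market growing at that rate would roughly double in a little under nine years.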

Major players in the BCI field now include not only academic institutions like the BrainGate consortium and medical device companies like Medtronic, but also a growing number of tech giants. Meta acquired CTRL-labs, which develops non-invasive neural interfaces. Kernel is building high-resolution brain-recording helmets. [6] DARPA continues to fund advanced neural research through initiatives like the Neural Engineering System Design program. [7]

And then there’s Neuralink.

Neuralink and the Trust Problem

Founded by Elon Musk in 2016, Neuralink represents perhaps the most ambitious and controversial BCI venture. The company’s technical approach centres on ultra-thin flexible “threads” (thinner than a human hair) studded with electrodes that can be inserted into brain tissue. Their current N1 implant contains 1,024 electrodes, far more than traditional systems, and transmits data wirelessly. [8]

After years of development and animal testing, Neuralink received FDA approval for human trials in 2023 and implanted its first human participant in January 2024. The company’s stated medical goals include treating conditions like paralysis and blindness. But Musk’s ambitions extend far beyond medical applications to what he calls “a Fitbit in your skull” [9] and ultimately a “merger with artificial intelligence”. [10]

This is where the trust question becomes critical. Musk’s history with regulation across his companies reveals a consistent pattern: move fast, push boundaries, and ask for forgiveness rather than permission. At Twitter (now X), he dismantled content moderation teams and challenged regulatory frameworks. Tesla’s Autopilot system has faced multiple investigations over safety concerns and misleading marketing. SpaceX has repeatedly clashed with the FAA over launch approvals and safety protocols.

Neuralink itself has faced scrutiny over its animal testing practices, with reports of unnecessary animal suffering and deaths prompting federal investigations. [11] The company’s human trials have raised questions about informed consent protocols and long-term monitoring plans. Most concerning is the lack of transparency regarding data usage policies: who owns the neural data collected, how it might be used, and what protections participants have. [12]

When Musk speaks of a “merger with AI” as a “defensive necessity,” [13] we must ask: what vision of humanity’s future is driving this development? What kind of society are we becoming if thought itself is privatised? As Shoshana Zuboff argues in her analysis of surveillance capitalism, we have already seen how behavioural data has been commodified by tech companies. Neural data represents the ultimate extension of this extractive model: not just what we do, but what we think and feel.

Can BCIs Be Used for Manipulation or Propaganda?

The potential for BCIs to be used for manipulation is not speculative. Military and intelligence agencies have long shown interest in neural interface technology. NATO and its member states are actively exploring brain-computer interface (BCI) technologies through research initiatives aimed at enhancing military capabilities. [14] In the US, the Defense Advanced Research Projects Agency (DARPA) has funded multiple programs exploring BCIs for applications ranging from treating PTSD to enhancing soldier performance. The dual-use nature of this research, potentially beneficial for treating conditions like depression but also applicable to influence operations, demands careful oversight. [15]

Bidirectional BCIs that can stimulate as well as record brain activity pose particular concerns. Research has already demonstrated that targeted neural stimulation can influence decision-making processes and emotional states. As Klein and Rubel (2018) note in their analysis of BCI ethics, “The ability to write information to the brain raises profound questions about autonomy and agency.” [16]

Technical safeguards against unauthorized stimulation, transparency requirements for bidirectional systems, and user control mechanisms are essential. Yuste and colleagues (2017) emphasize that preserving agency, our capacity to act according to our own intentions, must be a central priority in BCI development. Without such protections, these technologies could enable unprecedented forms of cognitive coercion. [17]

Who Gets Access? Who Gets Used?

The global distribution of BCI research and development reveals stark disparities. The vast majority of neurotechnology companies and research institutions are concentrated in North America, Europe, and East Asia. High costs and infrastructure requirements create significant barriers to access for much of the world’s population.

These disparities raise serious ethical concerns about who benefits from and who bears the risks of BCI development. Clinical trials often recruit from vulnerable populations who may have few other treatment options. Goering and colleagues (2021) emphasize the importance of inclusive research practices that ensure “the benefits and burdens of neurotechnology are distributed justly.” [18]

There are also legitimate concerns about technological colonialism: the export of Western/Northern values embedded in neurotechnology design and implementation. Different cultures have varying conceptions of privacy, personhood, and the relationship between mind and body. When BCIs developed primarily in Western contexts are deployed globally, they risk imposing particular cultural frameworks on diverse populations.

A human rights-based approach to BCI development would emphasize both the right to benefit from scientific progress and the right to protection from exploitation. It would ensure equitable access to therapeutic applications while guarding against the commodification of neural data.

Who Decides What a “Person” Is?

One of the most profound questions raised by BCIs concerns our understanding of personhood itself. Different cultural traditions have vastly different conceptions of mind and consciousness. Western individualistic frameworks tend to locate thought and identity within the individual brain, while many Indigenous perspectives emphasize more collective understandings of cognition. Religious and philosophical traditions worldwide offer diverse accounts of the relationship between brain, mind, and self.

These varying perspectives have significant implications for BCI ethics. How we understand neural privacy, cognitive enhancement, or the boundaries of the self will be shaped by cultural frameworks. Yuste and colleagues (2017) highlight the importance of preserving identity—our sense of self and continuity—as neurotechnology advances.

Inclusive ethics requires bringing diverse perspectives into governance bodies and policy discussions. The risks of imposing a single cultural framework on neurotechnology development are substantial—from misunderstanding user needs to perpetuating historical injustices. As Goering and colleagues (2021) argue, “Neuroethics must be a global conversation that includes voices from different disciplines, cultures, and lived experiences”.

A Global Concern: Digital Peace at Stake

The implications of BCI technology extend far beyond individual privacy to fundamental questions of digital peace:

First, neural data is uniquely intimate.

As Yuste and colleagues (2017) emphasize, brain data can reveal patterns of thought, emotion, and intention that precede action—making it more sensitive than any other form of personal data. Yet, current legal frameworks and medical device regulations remain focused on physical safety, not the informational and psychological risks BCIs introduce. Data protection laws like the GDPR and CCPA were not designed for the depth and sensitivity of neural data, which could expose not just what we choose to share but our unfiltered thoughts and subconscious responses.

Second, new rights and regulatory gaps are emerging.

Scholars such as Ienca and Andorno (2017) have proposed “neurorights,” including the right to mental privacy and cognitive liberty. [19] Chile has led the way by amending its constitution to protect neural identity, but most countries lack comprehensive laws addressing these challenges. [20] The regulatory landscape remains fragmented, and there is a growing call for international standards and the application of the precautionary principle.

Third, BCIs raise profound questions about autonomy, agency, and identity.

Bidirectional BCIs, which can both read and stimulate brain activity, introduce the possibility of influencing users’ intentions or actions. This blurs the line between human agency and machine input, raising concerns about free will, personal responsibility, and even changes in self-perception or identity.

Fourth, security and accountability are urgent issues.

Neural devices are vulnerable to cyberattacks, making the protection of neural data not just a privacy concern but a matter of personal safety. There is also uncertainty around legal and moral responsibility if a device malfunctions or is compromised—who is accountable for the consequences?

Fifth, equity and social inclusion must be prioritized.

As with many advanced technologies, early access to BCIs is likely to be limited to wealthier nations and privileged groups, risking the amplification of existing social inequalities. At the same time, BCIs offer transformative benefits for people with disabilities, making equitable access a matter of social justice and human rights.

Finally, BCIs introduce new geopolitical and security risks.

Cross-border data flows, technological sovereignty, and the potential for BCIs to be used in cognitive warfare or manipulation are now part of the international policy conversation. Without coordinated global governance, protections may depend on geography, and the risk of fragmentation remains high.

In summary: BCIs challenge our existing notions of privacy, autonomy, security, and equity. The field is advancing rapidly, but the ethical, legal, and social frameworks needed to guide its development and protect human rights are still catching up. Addressing these issues is essential to ensure that the promise of BCIs does not come at the expense of digital peace and fundamental freedoms.

The Battle for the Brain: Conclusion

As Rafael Yuste and colleagues warned in their landmark 2017 paper in Nature, “Brain-recording technology is advancing so quickly that we need ethical guidelines before devices become widely available.” More than seven years later, those guidelines remain incomplete while commercial development accelerates.

So, would you trust Elon Musk with your brain data? The question is not merely about one entrepreneur or company, but about the frameworks we establish for this critical technology. The battle for the brain is ultimately about who controls the future of human cognition itself.

A vision of cognitive sovereignty would ensure individual control over neural data, democratic governance of neurotechnology, equitable access to beneficial applications, and robust protections against exploitation and manipulation. It would recognize that brain data is not just another commodity but something fundamental to our humanity.

But the harsh reality is that traditional governance approaches have been thoroughly discredited by recent history. We had congressional hearings for Facebook, implemented GDPR for data protection, and established AI ethics boards, and yet Big Tech has only grown more powerful, surveillance more pervasive, and the manipulation of democratic processes more sophisticated. The UN is weaker than ever, while tech giants operate with more influence than most nation-states. The future of human cognition may still be ours to defend, but we have no clear path forward, and time is running out.

We stand at a rare moment: BCI technology is advanced enough to matter, but not yet so entrenched that meaningful governance is impossible.

The pessimistic view—that we’ll simply repeat surveillance capitalism’s mistakes with neural data—ignores what we’ve learned from those very mistakes. Unlike the early internet era, we now understand the patterns of tech capture: the promises of self-regulation, the “move fast and break things” mentality, the gradual normalization of surveillance. This knowledge is power.

Three principles must guide our approach:

- Data Sovereignty: Our sensitive brain data should belong to us, not to any company. We need technical standards requiring end-to-end encryption of neural data, user control over data retention and deletion, and legal frameworks treating neural information as fundamentally different from other personal data. Chile’s constitutional amendment protecting neural identity points the way forward.

- Democratic Participation: BCI development cannot be left to corporate boardrooms and military contractors. We need public input in research priorities, transparent clinical trial protocols, and citizen oversight of dual-use applications. The stakes are too high for decisions to be made behind closed doors.

- Equitable Access: The transformative benefits of BCIs for people with disabilities must not become privileges of the wealthy. Public research funding, insurance coverage for therapeutic applications, and international technology transfer can prevent BCIs from amplifying existing inequalities.

The path forward requires coordinated action: Researchers are already building privacy-preserving neural interfaces. Patient advocacy groups are demanding meaningful consent protocols. Policymakers in multiple countries are developing neural rights frameworks. These efforts need amplification, not abandonment.

The battle for the brain is not predetermined. It depends on whether we choose informed engagement over resigned acceptance. Corporate capture of our digital lives was not inevitable—it happened because we collectively chose convenience over agency. We can choose differently this time.

The frameworks exist. The knowledge exists. The momentum is building. The question is whether we’ll seize this moment or let it slip away.

It’s time to rethink the future we’re building.

References

1. Zuboff, S. (2019). The age of surveillance capitalism: The fight for a human future at the new frontier of power. Public Affairs.

2. Moses, D. A., Metzger, S. L., Liu, J. R., Anumanchipalli, G. K., Makin, J. G., Sun, P. F., Chartier, J., Dougherty, M. E., Liu, P. M., Abrams, G. M., Tu-Chan, A., Ganguly, K., & Chang, E. F. (2021). Neuroprosthesis for Decoding Speech in a Paralyzed Person with Anarthria. New England Journal of Medicine, 385(3), 217–227. https://doi.org/10.1056/nejmoa2027540

3. Ajiboye, A. B., Willett, F. R., Young, D. R., Memberg, W. D., Murphy, B. A., Miller, J. P., Walter, B. L., Sweet, J. A., Hoyen, H. A., Keith, M. W., Peckham, P. H., Simeral, J. D., Donoghue, J. P., Hochberg, L. R., & Kirsch, R. F. (2017). Restoration of reaching and grasping movements through brain-controlled muscle stimulation in a person with tetraplegia: a proof-of-concept demonstration. The Lancet, 389(10081), 1821–1830. https://doi.org/10.1016/s0140-6736(17)30601-3

4. Binnendijk, A., Marler, T., & Bartels, E. (2020). Brain-Computer Interfaces: An initial assessment. RAND Corporation. https://www.rand.org/content/dam/rand/pubs/research_reports/RR2900/RR2996/RAND_RR2996.pdf

5. Skyrme, T. (2024, July 31). Brain Computer Interfaces 2025-2045: Technologies, Players, Forecasts. IDTechEx. https://www.idtechex.com/en/research-report/brain-computer-interfaces/1024

6. Statt, N. (2019, September 23). Facebook acquires neural interface startup CTRL-Labs for its mind-reading wristband. The Verge. https://www.theverge.com/2019/9/23/20881032/facebook-ctrl-labs-acquisition-neural-interface-armband-ar-vr-deal

7. Defense Advanced Research Projects Agency (DARPA). (2025). Neural Engineering System Design (NESD). Darpa.mil. https://www.darpa.mil/research/programs/neural-engineering-system-design

8. Neuralink. (2023, September 19). Neuralink’s First-in-Human Clinical Trial is Open for Recruitment. Neuralink. https://neuralink.com/blog/first-clinical-trial-open-for-recruitment/

9. Neuralink. (2020). Neuralink Progress Update, Summer 2020 [YouTube Video]. YouTube. https://www.youtube.com/watch?v=DVvmgjBL74w

10. Rogan, J. (2020). Joe Rogan Experience #1470 – Elon Musk [YouTube Video]. YouTube. https://www.youtube.com/watch?v=RcYjXbSJBN8

11. Vanian, J. (2022). Elon Musk’s brain-implant startup is being accused of abusing monkeys. Fortune. https://fortune.com/2022/02/09/elon-musks-neuralink-brain-implant-startup-monkeys-animal-mistreatment-complaint/

12. Lavazza, A., Balconi, M., Ienca, M., Minerva, F., Pizzetti, F. G., Reichlin, M., Samorè, F., Sironi, V. A., Navarro, M. S., & Songhorian, S. (2025). Neuralink’s brain-computer interfaces: Medical Innovations and Ethical Challenges. Frontiers in Human Dynamics, 7. https://doi.org/10.3389/fhumd.2025.1553905

13. Kharpal, A. (2017, February 13). Elon Musk: Humans must merge with machines or become irrelevant in AI age. CNBC. https://www.cnbc.com/2017/02/13/elon-musk-humans-merge-machines-cyborg-artificial-intelligence-robots.html

14. NATO Science and Technology Organization. (2025). Collaborative Programme of Work 2025. https://www.sto.nato.int/public/20250210_UC_IKM_NATO-science-and-technology-organization-2025-collaborative-programme-of-work.pdf

15. Hensing, J., & Faika, A. (2025). Neurotechnology: A New Frontier for Prosperity and Security in Germany and Europe? Gppi.net. https://gppi.net/2025/03/27/neurotechnology-a-new-frontier-for-prosperity-and-security-in-germany-and-europe

16. Klein, E., & Rubel, A. (2018). Privacy and ethics in brain-computer interface research. Philpapers.org. https://philpapers.org/rec/KLEPAE-4

17. Yuste, R., Goering, S., Arcas, B. A. y, Bi, G., Carmena, J. M., Carter, A., Fins, J. J., Friesen, P., Gallant, J., Huggins, J. E., Illes, J., Kellmeyer, P., Klein, E., Marblestone, A., Mitchell, C., Parens, E., Pham, M., Rubel, A., Sadato, N., & Sullivan, L. S. (2017). Four ethical priorities for neurotechnologies and AI. Nature, 551(7679), 159–163. https://doi.org/10.1038/551159a

18. Goering, S., Klein, E., Specker Sullivan, L., Wexler, A., Agüera y Arcas, B., Bi, G., Carmena, J. M., Fins, J. J., Friesen, P., Gallant, J., Huggins, J. E., Kellmeyer, P., Marblestone, A., Mitchell, C., Parens, E., Pham, M., Rubel, A., Sadato, N., Teicher, M., & Wasserman, D. (2021). Recommendations for Responsible Development and Application of Neurotechnologies. Neuroethics, 14(3), 365–386. https://doi.org/10.1007/s12152-021-09468-6

19. Ienca, M., & Andorno, R. (2017). Towards new human rights in the age of neuroscience and neurotechnology. Life Sciences, Society and Policy, 13(1). https://doi.org/10.1186/s40504-017-0050-1

20. Dayton, L. (2021, March 16). Call for human rights protections on emerging brain-computer interface technologies. Nature Index. https://www.nature.com/nature-index/news/human-rights-protections-artificial-intelligence-neurorights-brain-computer-interface

This research article examines the profound implications of Neuralink’s brain-computer interface (BCI) technology on religious and psychological experiences. As BCIs advance toward direct neural interfacing, they present unprecedented opportunities and challenges for human spirituality, cognition, and self-understanding. Drawing on interdisciplinary research, we investigate the potential for technologically-mediated spiritual experiences and their impact on traditional religious practices and institutions. The study explores ethical considerations surrounding cognitive liberty, mental privacy, and the authenticity of BCI-induced experiences. Key findings indicate that BCIs could potentially induce or enhance altered states of consciousness associated with spiritual experiences, augment meditation practices, and redefine religious rituals. However, these capabilities raise significant ethical concerns, including issues of cognitive manipulation and equitable access. The research also highlights potential shifts in religious authority structures and the emergence of new techno-spiritual philosophies. By analyzing the societal and cultural impacts of widespread BCI adoption, this study provides a nuanced understanding of how Neuralink’s technology may reshape the landscape of human consciousness and spirituality. The article contributes to the critical dialogue on the future of religious and psychological experiences in an era of advancing neurotechnology, balancing the transformative potential of BCIs with careful consideration of their ethical implications and philosophical ramifications.

Dear Christopher, thanks for sharing this abstract. Would you mind sharing the link to the article? I am wondering how you were able to derive conclusions about the role of Neuralink’s BCIs in religious and psychological experiences in particular.